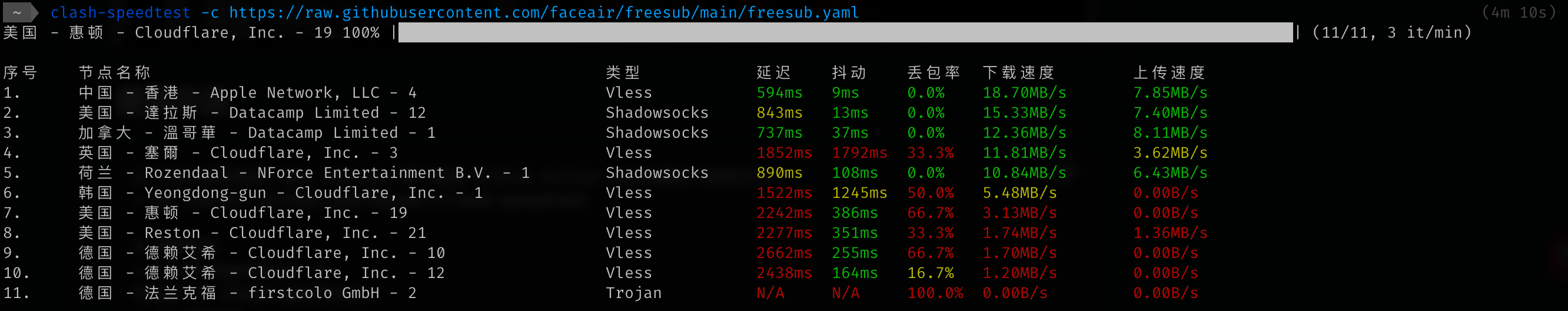

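Sharing a clash-speedtest build I've been using recently.

Original: faceair/clash-speedtest

Fork with additional development: gyyst/clash-speedtest

I was originally looking for a lightweight tool that tests node speed and node "purity"; subs-check was a bit too heavy for that.

That's how I found this newer clash-speedtest, which fits my needs fairly well.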

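Concurrency setting (a mixed blessing: your network may not be able to keep up)

Output format changed to URI, which suits my needs better (so far URI output has only been tested with vless, hy2, and ss; other protocols are untested)

Standardized node remarks

A fast mode that tests latency only

Streaming-media unlock testing

Sorting by different fields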

One last little Easter egg:

You can run the speed test for free on GitHub Actions.

```yaml
name: Speedtest Proxyz Node

on:
  workflow_dispatch:
  schedule:
    # Runs every day at 20:00 China time (12:00 UTC); adjust the cron expression as needed
    - cron: "0 12 * * *"

jobs:
  speedtest-proxy:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v3

      - name: Set up Python 3.9
        uses: actions/setup-python@v4
        with:
          # Quoted so YAML does not parse the version as a float
          python-version: "3.9"

      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install requests

      - name: Download and extract clash-speedtest
        run: |
          mkdir -p proxy
          wget https://github.com/gyyst/clash-speedtest/releases/latest/download/clash-speedtest_Linux_x86_64.tar.gz -O clash-speedtest.tar.gz
          tar -xzf clash-speedtest.tar.gz -C proxy/
          rm clash-speedtest.tar.gz

      - name: Make clash-speedtest executable
        run: |
          chmod +x proxy/clash-speedtest

      - name: Run clash-speedtest
        env:
          TEST_URL: ${{ secrets.TEST_URL }}  # read TEST_URL from the repository Secrets
        run: |
          # Quote the URL so characters such as & are not interpreted by the shell
          proxy/clash-speedtest -c "$TEST_URL" -output proxy/result.txt -concurrent 8 -max-latency 1000ms -min-download-speed 5 -min-upload-speed 0.5

      - name: Run filter script
        env:
          SUB_URL: ${{ secrets.SUB_URL }}  # read SUB_URL from the repository Secrets
        run: |
          python proxy/filter.py
```
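GitHub Actions evaluates `schedule` cron expressions in UTC, which is why 20:00 China time appears as hour 12 in the workflow. A minimal sketch of that conversion, assuming China Standard Time is a fixed UTC+8 offset with no daylight saving; the helper name `china_time_to_utc_cron` is hypothetical and not part of clash-speedtest:

```python
def china_time_to_utc_cron(hour: int, minute: int = 0) -> str:
    """Build a daily cron expression in UTC for a wall-clock time in China (UTC+8)."""
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute must be 0-59")
    # Subtract the fixed 8-hour offset and wrap around midnight
    utc_hour = (hour - 8) % 24
    return f"{minute} {utc_hour} * * *"


# 20:00 China time corresponds to the expression used in the workflow
print(china_time_to_utc_cron(20))  # 0 12 * * *
```

Times before 08:00 China time wrap to the previous UTC day, which makes no difference for a job that runs daily.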

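As for the Python script, it can write the results to an aggregated-subscription endpoint, for example CM's aggregated subscription project:

cmliu/CF-Workers-SUB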

```python
import os
from urllib.parse import urlparse

import requests

# Read the target URL from the environment and fail early if it is missing or empty
url = os.getenv('SUB_URL')
if not url:
    raise ValueError("SUB_URL is not set or empty")

# Split the URL into host and path
parsed_url = urlparse(url)
host = parsed_url.netloc
path = parsed_url.path

# Read result.txt as the payload (the path is relative to the working directory)
with open('proxy/result.txt', 'r', encoding='utf-8') as f:
    payload = f.read().strip()

# Browser-like request headers. The authority/method/path/scheme entries mirror
# HTTP/2 pseudo-headers and are sent here as ordinary headers.
headers = {
    'authority': host,
    'method': 'POST',
    'path': path,
    'scheme': 'https',
    'accept': '*/*',
    'accept-language': 'zh-CN,zh;q=0.9',
    'cache-control': 'max-age=0',
    'origin': f'https://{host}',
    'priority': 'u=1, i',
    'referer': url,
    'sec-ch-ua': '"Chromium";v="134", "Not:A-Brand";v="24", "Google Chrome";v="134"',
    'sec-ch-ua-mobile': '?0',
    'sec-ch-ua-platform': '"Windows"',
    'sec-fetch-dest': 'empty',
    'sec-fetch-mode': 'cors',
    'sec-fetch-site': 'same-origin',
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/134.0.0.0 Safari/537.36',
    'content-type': 'text/plain;charset=UTF-8',
    'Host': host,
    'Connection': 'keep-alive',
}

# Send the body as UTF-8 bytes so non-ASCII node names match the declared charset
response = requests.post(url, headers=headers, data=payload.encode('utf-8'), timeout=30)

print(response.text)
```
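The script above uploads `result.txt` unchanged. Since the post reports URI output as tested only with vless, hy2, and ss, one optional step before uploading is to drop blank lines, duplicates, and URIs with other schemes. A rough sketch under those assumptions: the `clean_payload` helper and the `ALLOWED_SCHEMES` set are hypothetical, `hysteria2` is included only as an assumed alias of `hy2`, and the sample URIs are placeholders rather than real nodes:

```python
# Hypothetical allow-list based on the protocols the post reports as tested
ALLOWED_SCHEMES = {"vless", "hy2", "hysteria2", "ss"}


def clean_payload(text: str, allowed=ALLOWED_SCHEMES) -> str:
    """Keep one copy of each URI line whose scheme is in the allow-list."""
    seen = set()
    kept = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line in seen:
            continue  # skip blank lines and exact duplicates
        scheme, sep, _ = line.partition("://")
        if sep and scheme.lower() in allowed:
            seen.add(line)
            kept.append(line)
    return "\n".join(kept)


# Placeholder URIs for illustration only
sample = (
    "vless://id@example.com:443#a\n"
    "\n"
    "vless://id@example.com:443#a\n"
    "trojan://pw@example.com:443#b\n"
    "ss://abc@example.com:8388#c\n"
)
print(clean_payload(sample))
```

In `filter.py`, this would be applied to the text read from `proxy/result.txt` before it is sent as the request body.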